During a VMWare NSX training class a while back, a question was raised to the instructor concerning the throughput that is possible on a NSX distributed switch or router (two components that are crucial to the NSX platform and must handle all VM traffic. If you are unfamiliar with their roles, check out this video).

The instructor simply replied with the all too popular term “line-rate”, which seemed to be reassuring and quench the classes concern, but that answer sparked quite a bit of apprehension for me.

To explain the concern, we need to understand the role of Application Specific Integrated Circuits (ASICs), specifically, Content Addressable Memory (CAM) in modern networking equipment.

Intro to CAM

CAM circuits are critical to contemporary routers and switches due to the latency and bandwidth requirements put upon those devices. A simple layer-2 switch will use CAM to perform high-speed mac address lookups against its forwarding table so that it can find the appropriate egress interface for the Ethernet frame in question. The reason CAM is used for these lookups (instead of having the CPU parse the forwarding table in RAM) is because CAM has the ability to perform a lookup of its entire data table in a single clock cycle (regardless of how many entries in the table). If this operation was performed by a CPU, then the CPU would have to parse through each entry in the forwarding table (which would be stored in RAM) one by one until it finds a match. This could require hundreds or even thousands of clock cycles by the CPU before a match can be found for a single frame, depending on the size of the forwarding table.

BCAM and TCAM

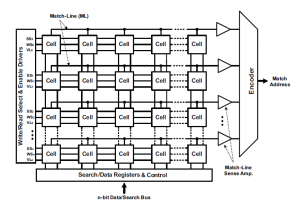

Binary CAM tables consist of rows of memory cells. Each cell contains either a 1 or a 0 (hence the “binary”), and contains enough cells to store the searched word (ie: MAC address) and a few related words (like an egress port and VLAN ID). When the circuit performs a lookup against these rows, there can only be a single match, as the data in each row is unique.

Ternary CAM operates much like Binary CAM with one exception: A cell can contain a “1” or a “0” or a “don’t care”. The part of a memory row which contains a “don’t care” will always return a match during a search operation. This “don’t care” feature of TCAM is what makes the lookup of a longest prefix possible. It allows a specific IP address to be entered as the search word and will return the most specific match without having to make an exact match (as is necessary in BCAM).

Can’t We Just Do This in Software?

The purpose of BCAM and TCAM in routers and switches is to maintain a consistent and rapid next step lookup for packets and frames no matter how many entries are in the memory tables. This is required to ensure low latency forwarding from hop to hop and is even more important when you have multiple lookup operations per packet (ie: firewalls need to process against ACLs, routes, NAT, etc when processing packets; the removal of hardware acceleration from a firewall can cause an exponential decrease in performance). CAM was invented to replace process (software) switching and routing because software couldn’t maintain an acceptable throughput when memory tables began to grow (check out this PDF that shows how much the throughput of a router will decrease when it no longer uses hardware-based forwarding). Removing the benefit of hardware assisted forwarding while still expecting the same throughput and latency required by a modern datacenter may end up as a disappointment to some.

Some of the SDN solutions that promise the moon may be putting us back into the software-based routing and switching which was determined to be too slow a few decades ago (when the bandwidth we use today was considered science fiction). Although SDN may give us some increased flexibility in our infrastructure, we may be taking a step backwards in performance. Only time will tell…

Be the first to comment on "SDN – Is Software Fast Enough"